See more publications and research on Fang-Lue Zhang's homepage.

Reference-based deep line art video colorization [IEEE Transactions on Visualization and Computer Graphics 2022, PDF]

Coloring line art images based on the colors of reference images is a crucial stage in animation production, which is time-consuming and tedious. This paper proposes a deep architecture to automatically color line art videos with the same color style as the given reference images. Our framework consists of a color transform network and a temporal refinement network based on 3U-net. The color transform network takes the target line art images as well as the line art and color images of the reference images as input and generates corresponding target color images. To cope with the large differences between each target line art image and the reference color images, we propose a distance attention layer that utilizes non-local similarity matching to determine the region correspondences between the target image and the reference images and transforms the local color information from the references to the target. To ensure global color style consistency, we further incorporate Adaptive Instance Normalization (AdaIN) with the transformation parameters obtained from a multiple-layer AdaIN that describes the global color style of the references extracted by an embedder network. The temporal refinement network learns spatiotemporal features through 3D convolutions to ensure the temporal color consistency of the results. Our model can achieve even better coloring results by fine-tuning the parameters with only a small number of samples when dealing with an animation of a new style. To evaluate our method, we build a line art coloring dataset. Experiments show that our method achieves the best performance on line art video coloring compared to the current state-of-the-art methods.

Active Colorization for Cartoon Line Drawings [IEEE Transactions on Visualization and Computer Graphics 2020, PDF]

In the animation industry, the colorization of raw sketch images is a vitally important but very time-consuming task. This article focuses on providing a novel solution that semiautomatically colorizes a set of images using a single colorized reference image. Our method is able to provide coherent colors for regions that have similar semantics to those in the reference image. An active-learning-based framework is used to match local regions, followed by mixed-integer quadratic programming (MIQP) which considers the spatial contexts to further refine the matching results. We efficiently utilize user interactions to achieve high accuracy in the final colorized images. Experiments show that our method outperforms the current state-of-the-art deep learning-based colorization method in terms of color coherency with the reference image. The region matching framework could potentially be applied to other applications, such as color transfer.

Deep Portrait Image Completion and Extrapolation [IEEE TIP 2019, download via arxiv]

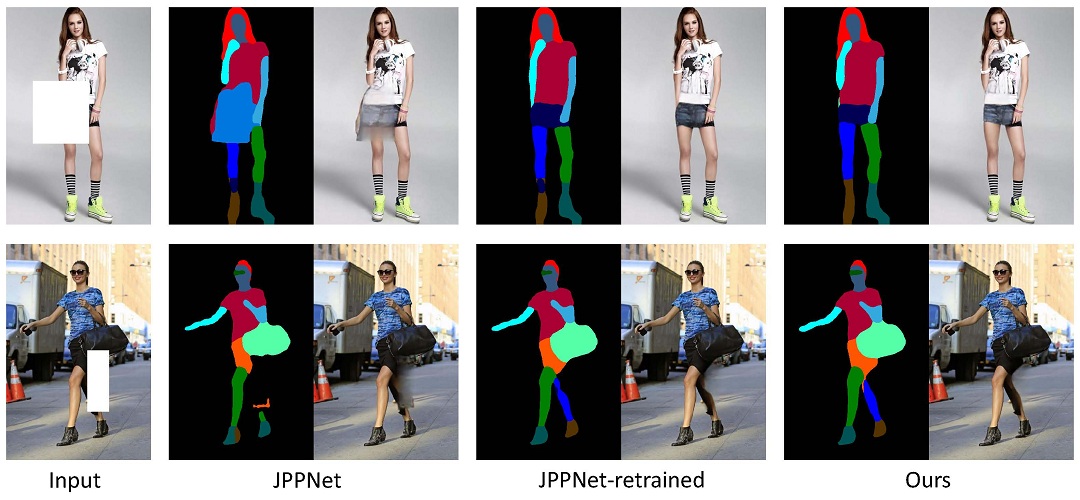

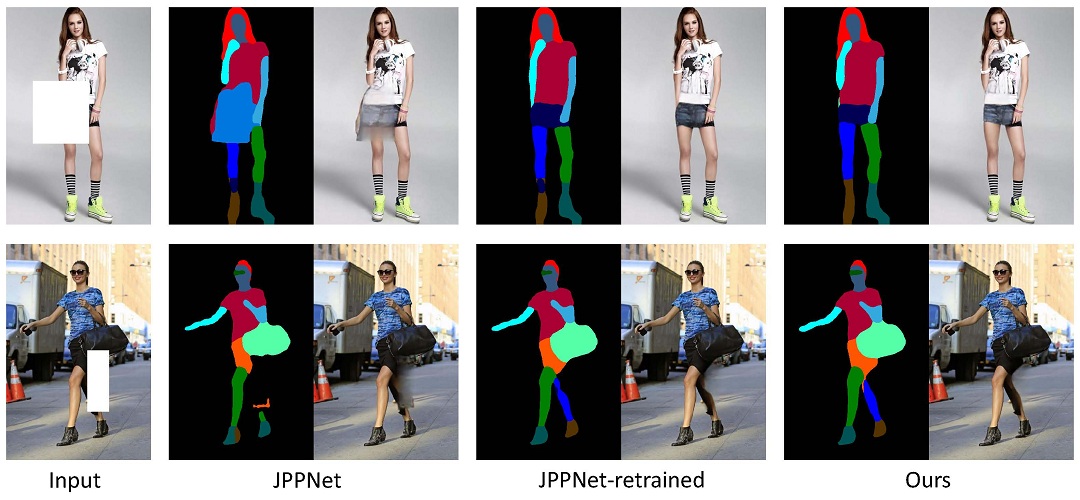

General image completion and extrapolation methods often fail on portrait images where parts of the human body need to be recovered, a task that requires accurate human body structure and appearance synthesis. We present a two-stage deep learning framework for tackling this problem. In the first stage, given a portrait image with an incomplete human body, we extract a complete, coherent human body structure through a human parsing network, which focuses on structure recovery inside the unknown region with the help of pose estimation. In the second stage, we use an image completion network to fill the unknown region, guided by the structure map recovered in the first stage. For realistic synthesis, the completion network is trained with both perceptual loss and conditional adversarial loss. We evaluate our method on public portrait image datasets and show that it outperforms other state-of-the-art general image completion methods. Our method enables new portrait image editing applications such as occlusion removal and portrait extrapolation. We further show that the proposed general learning framework can be applied to other types of images, e.g. animal images.
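The data flow of the second stage can be illustrated with a minimal NumPy sketch. It is not the paper's implementation: the function names `build_completion_input` and `composite`, the one-hot encoding of the structure map, and the channel layout are all assumptions made for illustration. The sketch only shows how a structure map might be stacked with the masked image as network input, and how a generated result could be pasted back into the unknown region.

```python
import numpy as np

def build_completion_input(image, mask, structure_map, num_classes):
    """Stack masked image, mask, and one-hot structure map along the channel axis.

    image:         (H, W, 3) float array in [0, 1]
    mask:          (H, W) float array, 1 = unknown region, 0 = known pixels
    structure_map: (H, W) integer body-part labels (hypothetical stage-one output)
    """
    # Zero out the unknown region so the network cannot see it.
    masked = image * (1.0 - mask)[..., None]
    # One-hot encode the labels: (H, W) -> (H, W, num_classes).
    one_hot = np.eye(num_classes, dtype=image.dtype)[structure_map]
    mask_channel = mask[..., None].astype(image.dtype)
    return np.concatenate([masked, mask_channel, one_hot], axis=-1)

def composite(image, mask, generated):
    """Keep known pixels from the input and take unknown pixels from the generator."""
    m = mask[..., None]
    return m * generated + (1.0 - m) * image
```

With this layout the network input has `3 + 1 + num_classes` channels, and compositing guarantees that pixels outside the unknown region are left untouched.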

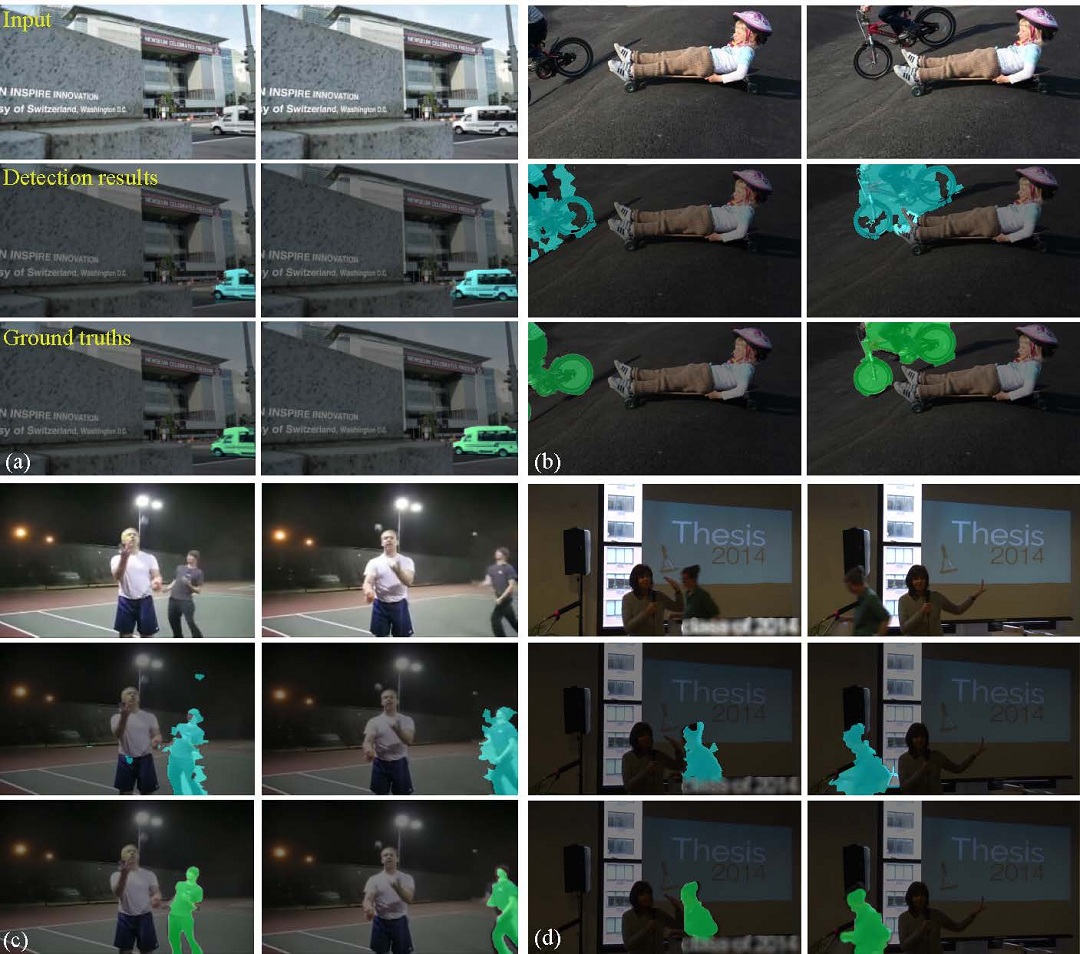

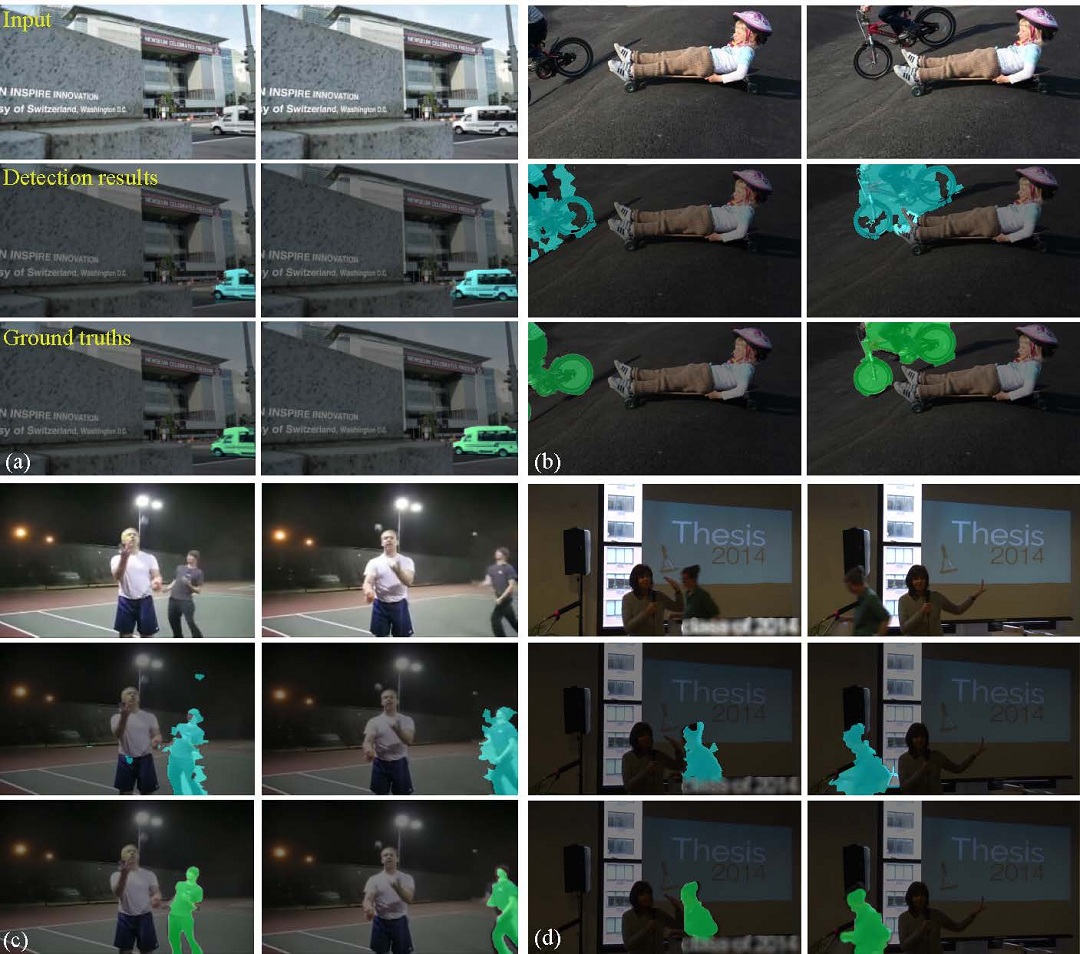

Detecting and Removing Visual Distractors for Video Aesthetic Enhancement [IEEE TMM 2018, download via IEEE]

Personal videos often contain visual distractors, which are objects that are accidentally captured that can distract viewers from focusing on the main subjects. We propose a method to automatically detect and localize these distractors through learning from a manually labeled dataset. To achieve spatially and temporally coherent detection, we propose extracting features at the Temporal-Superpixel (TSP) level using a traditional SVM-based learning framework. We also experiment with end-to-end learning using Convolutional Neural Networks (CNNs), which achieves slightly higher performance than other methods. The classification result is further refined in a post-processing step based on graph-cut optimization. Experimental results show that our method achieves an accuracy of 81% and a recall of 86%. We demonstrate several ways of removing the detected distractors to improve the video quality, including video hole filling, video frame replacement, and camera path re-planning. The user study results show that our method can significantly improve the aesthetic quality of videos.
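For readers unfamiliar with the two reported metrics, the following sketch shows how accuracy and recall are computed from binary distractor labels. The abstract does not say whether the figures are measured per TSP, per pixel, or per frame, so the unit of evaluation here is an assumption, and the function name `accuracy_and_recall` and the toy labels are purely illustrative.

```python
import numpy as np

def accuracy_and_recall(predicted, actual):
    """Compute accuracy and recall for binary labels (1 = distractor)."""
    predicted = np.asarray(predicted, dtype=bool)
    actual = np.asarray(actual, dtype=bool)
    true_pos = np.sum(predicted & actual)    # distractors correctly detected
    false_neg = np.sum(~predicted & actual)  # distractors that were missed
    accuracy = float(np.mean(predicted == actual))
    total_pos = true_pos + false_neg
    recall = float(true_pos / total_pos) if total_pos > 0 else 0.0
    return accuracy, recall

# Toy example: six units (e.g. superpixels), three of which are true distractors.
pred = [1, 0, 1, 1, 0, 0]
true = [1, 0, 0, 1, 1, 0]
acc, rec = accuracy_and_recall(pred, true)
```

Accuracy counts every correct decision, while recall only measures how many of the true distractors were found, which is why the two numbers can differ.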
