See more publications and research on Fang-Lue Zhang's homepage.

Rendering-Aware HDR Environment Map Prediction From A Single Image [AAAI 2022]

We present a two-stage deep learning-based method to predict an HDR environment map from a single narrow field-of-view LDR image. We first learn a hybrid parametric representation that sufficiently covers both the high- and low-frequency illumination components of the environment. Taking the estimated illumination as guidance, we build a generative adversarial network to synthesize an HDR environment map that enables realistic rendering effects. We explicitly account for rendering quality by supervising the networks with rendering losses in both stages, applied to the predicted environment map as well as to the hybrid illumination representation.

=====================================================================================

Optimal Pose Guided Redirected Walking with Pose Score Precomputation [2022 IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR)]

Redirected walking (RDW) aims to reduce collisions with the physical space in VR applications. However, most previous RDW methods do not consider the possibility of future collisions after imperceptibly redirecting users. In this paper, we combine subtle RDW methods with a reset strategy and propose a novel RDW solution that makes better use of the physical space and triggers fewer resets. The key idea of our method is to discretize the possible user positions and orientations into a series of standard poses and to score each standard pose by the likelihood that its reachable poses hit obstacles. We propose a transfer path algorithm that measures the accessibility between standard poses and supports the calculation of their scores. Using our method, the user can be imperceptibly redirected during walking toward the optimal pose, i.e., the pose with the best score among all poses reachable from the user’s current pose. Experiments demonstrate that our method outperforms state-of-the-art methods across various environment sizes and obstacle layouts.

=====================================================================================

Bullet Comments for 360° Video [2022 IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR)]

Time-anchored on-screen comments, also known as bullet comments, are a popular feature of online video streaming. Bullet comments reflect audiences’ feelings and opinions at specific moments in a video, and have been shown to benefit both video content understanding and the level of social connection among viewers. In this paper, we investigate, for the first time, the problem of bullet comment display and insertion for 360° video viewed through a head-mounted display and controller. We design four bullet comment display methods and evaluate their effects on the 360° video experience. We further propose two controller-based methods for bullet comment insertion. Combining the display and insertion methods, users can watch 360° videos with bullet comments and interactively post new ones by selecting among existing comments.

=====================================================================================

Content-preserving image stitching with piecewise rectangular boundary constraints [IEEE TVCG 2020]

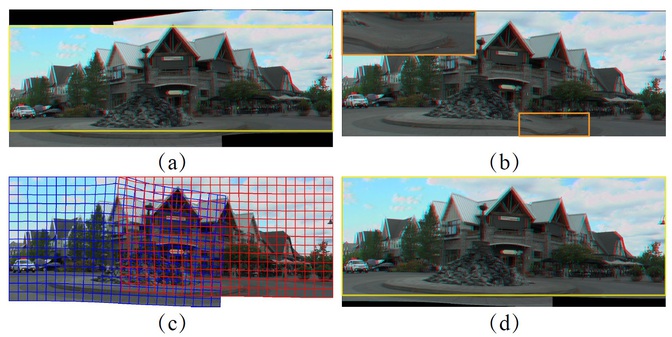

This paper proposes an approach to content-preserving image stitching with regular boundary constraints, which aims to stitch multiple images into a panoramic image with a piecewise rectangular boundary. Existing methods treat image stitching and rectangling as two separate steps, which may produce suboptimal results because the stitching process is not aware of the subsequent warping needed for rectangling. We address this limitation by formulating image stitching with regular boundaries as a unified optimization. Starting from the initial stitching result produced by a traditional warping-based optimization, we obtain the irregular boundary of the warped meshes using polygon Boolean operations, which robustly handle arbitrary mesh compositions. By analyzing this irregular boundary, we construct a piecewise rectangular boundary. We then incorporate line preservation and regular boundary preservation constraints into the image stitching framework and perform iterative optimization to obtain an optimal piecewise rectangular boundary. As a result, the boundary of the stitched panorama is made as close as possible to a rectangle while unwanted distortions are reduced. We further extend our method to video stitching by integrating temporal coherence into the optimization. Experiments show that our method efficiently produces visually pleasing panoramas with regular boundaries and unnoticeable distortions.
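The boundary-preservation part of this optimization can be illustrated with a small quadratic energy. The sketch below is not the paper's formulation: it assumes a single boundary side (for example the top edge) stored as the y-coordinates of its mesh vertices, uses a hypothetical `piecewise_targets` helper that splits the boundary at given breakpoints and takes each segment's mean height as its rectangular target, and omits the feature-alignment and line-preservation terms. The equally hypothetical `solve_boundary` balances staying near the initial stitched positions, reaching the piecewise target, and staying smooth within each segment.

```python
import numpy as np


def piecewise_targets(y0, breaks):
    """Hypothetical helper: split boundary vertices at the indices in `breaks`
    and give each segment a constant target height (the segment mean)."""
    y0 = np.asarray(y0, dtype=float)
    edges = [0, *breaks, len(y0)]
    target = np.empty_like(y0)
    seg_id = np.empty(len(y0), dtype=int)
    for k, (a, b) in enumerate(zip(edges[:-1], edges[1:])):
        target[a:b] = y0[a:b].mean()
        seg_id[a:b] = k
    return target, seg_id


def solve_boundary(y0, target, seg_id, w_data=1.0, w_bound=10.0, w_smooth=1.0):
    """Minimise  w_data*|y - y0|^2 + w_bound*|y - target|^2 + w_smooth*|D y|^2,
    where D holds first differences between neighbours in the same segment."""
    y0 = np.asarray(y0, dtype=float)
    n = len(y0)
    # Smoothness rows only link vertices of the same segment, so the step
    # between two rectangular pieces is not smoothed away.
    pairs = [i for i in range(n - 1) if seg_id[i] == seg_id[i + 1]]
    D = np.zeros((len(pairs), n))
    for r, i in enumerate(pairs):
        D[r, i], D[r, i + 1] = -1.0, 1.0
    # Normal equations of the quadratic energy.
    A = (w_data + w_bound) * np.eye(n) + w_smooth * D.T @ D
    b = w_data * y0 + w_bound * target
    return np.linalg.solve(A, b)


# Example: an irregular top edge with a step after the fourth vertex.
y0 = [0.0, 0.3, -0.2, 0.1, 2.0, 2.4, 1.9]
target, seg = piecewise_targets(y0, breaks=[4])
y = solve_boundary(y0, target, seg)
```

Raising `w_bound` relative to `w_data` pulls the boundary closer to the piecewise rectangle at the cost of deviating further from the initial warp; in the full method that trade-off is what the distortion-related terms would arbitrate.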

=====================================================================================

Visual Object Tracking in Spherical 360° Videos: A Bridging Approach [IEEE IVCNZ 2020]

We present a novel approach for adapting existing visual object trackers (VOT) to equirectangular video by means of image reprojection. Our system can easily be integrated with existing VOT algorithms, significantly increasing tracking accuracy and robustness in spherical 360° environments without requiring retraining. In each frame, our approach orthographically projects a subsection of the image centered on the tracked object. This projection reduces the distortion around the tracked object, allowing the VOT algorithm to track the object more easily as it moves.

=====================================================================================
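For the 360° tracking approach described above, the per-frame reprojection can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a standard equirectangular layout (longitude across columns, latitude down rows), a right/up/forward axis convention chosen here for convenience, and nearest-neighbour sampling; the function names and the `extent` parameter are hypothetical.

```python
import numpy as np


def _frame(lon0, lat0):
    """Right, up and forward unit vectors at the direction (lon0, lat0).

    Assumed convention: x right, y up, z forward; lon = lat = 0 looks along +z.
    """
    f = np.array([np.cos(lat0) * np.sin(lon0), np.sin(lat0), np.cos(lat0) * np.cos(lon0)])
    r = np.array([np.cos(lon0), 0.0, -np.sin(lon0)])
    u = np.array([-np.sin(lat0) * np.sin(lon0), np.cos(lat0), -np.sin(lat0) * np.cos(lon0)])
    return r, u, f


def plane_to_lonlat(a, b, lon0, lat0):
    """Lift orthographic image-plane coordinates (a, b) onto the unit sphere
    around (lon0, lat0) and return the longitude/latitude of each point."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    r, u, f = _frame(lon0, lat0)
    # Orthographic inverse: depth along the view axis is sqrt(1 - a^2 - b^2).
    # Plane points outside the unit disk have no preimage; they are clamped
    # to the limb and renormalised below.
    z = np.sqrt(np.clip(1.0 - a**2 - b**2, 0.0, None))
    p = a[..., None] * r + b[..., None] * u + z[..., None] * f
    p = p / np.linalg.norm(p, axis=-1, keepdims=True)
    lon = np.arctan2(p[..., 0], p[..., 2])
    lat = np.arcsin(np.clip(p[..., 1], -1.0, 1.0))
    return lon, lat


def ortho_patch(equi, lon0, lat0, size=128, extent=0.5):
    """Nearest-neighbour orthographic view of an equirectangular frame centred
    on (lon0, lat0). `extent` is the half-width of the patch on the image
    plane, i.e. the sine of the half field of view."""
    h, w = equi.shape[:2]
    coords = np.linspace(-extent, extent, size)
    # Rows run from the top of the patch (b = +extent) to the bottom.
    a, b = np.meshgrid(coords, coords[::-1])
    lon, lat = plane_to_lonlat(a, b, lon0, lat0)
    # Equirectangular layout: longitude across columns (wrapping at the seam),
    # latitude down rows.
    cols = np.floor((lon + np.pi) / (2 * np.pi) * w).astype(int) % w
    rows = np.clip(np.floor((np.pi / 2 - lat) / np.pi * h).astype(int), 0, h - 1)
    return equi[rows, cols]


def patch_pixel_to_lonlat(row, col, lon0, lat0, size=128, extent=0.5):
    """Map a patch pixel (e.g. the tracker's new box centre) back to a sphere
    direction, which can re-centre the next frame's patch on the object."""
    a = -extent + 2.0 * extent * col / (size - 1)
    b = extent - 2.0 * extent * row / (size - 1)
    lon, lat = plane_to_lonlat(a, b, lon0, lat0)
    return float(lon), float(lat)
```

In a tracking loop one would call `ortho_patch` on each frame at the current object direction, run an unmodified tracker on the patch, and pass its output through `patch_pixel_to_lonlat` to obtain the next centre. Nearest-neighbour sampling keeps the sketch short; bilinear interpolation would give smoother patches.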

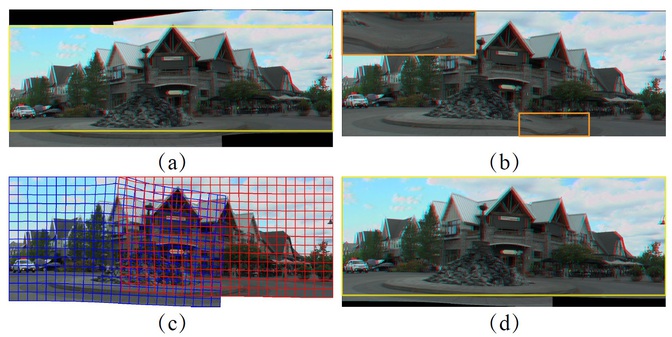

Stereoscopic Image Stitching with Rectangular Boundaries [CGI 2019]

This paper proposes a novel algorithm for stereoscopic image stitching, which aims to produce stereoscopic panoramas with rectangular boundaries. Such panoramas provide a wider field of view and a better viewing experience for users. To achieve this, we formulate stereoscopic image stitching and boundary rectangling in a global optimization framework that simultaneously handles feature alignment, disparity consistency and boundary regularity. Given two (or more) stereoscopic images with overlapping content, each containing two views (for the left and right eyes), we represent each view using a mesh, and our algorithm contains three main steps. We first perform a global optimization to stitch all the left views and right views simultaneously, which ensures feature alignment and disparity consistency. Then, with the optimized vertices in each view, we extract the irregular boundary of the stereoscopic panorama by performing polygon Boolean operations in the left and right views, and construct the rectangular boundary constraints. Finally, through a global energy optimization, we warp the left and right views according to the feature alignment, disparity consistency and rectangular boundary constraints. To show the effectiveness of our method, we further extend it to disparity adjustment and to stereoscopic stitching with a large horizon.
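The first step, stitching all left and right views simultaneously, can be illustrated as a single linear least-squares problem. This is a deliberately reduced sketch, not the paper's mesh-based energy: each view gets one 2-D translation instead of a warped mesh, view 0 is assumed to be the fixed reference, and the function name `solve_translations` and the weight `w_disp` are hypothetical. Alignment rows tie matched features across overlapping images of the same eye; disparity rows tie the two views of each stereo pair together so that horizontal disparity is preserved and vertical disparity is removed.

```python
import numpy as np


def solve_translations(n_views, align, disparity, w_disp=1.0):
    """Jointly solve one 2-D translation per view (view 0 fixed at the origin).

    align:     (view_a, view_b, pa, pb) feature matches between overlapping
               images seen by the same eye; pa + t_a should equal pb + t_b.
    disparity: (left_view, right_view, pl, pr) matches within one stereo pair.
    Returns an (n_views, 2) array of translations.
    """
    rows, rhs = [], []

    def add_row(va, vb, axis, target, weight):
        # One linear residual: weight * ((t_a - t_b)[axis] - target).
        row = np.zeros(2 * n_views)
        row[2 * va + axis] += weight
        row[2 * vb + axis] -= weight
        rows.append(row)
        rhs.append(weight * target)

    for va, vb, pa, pb in align:
        # Feature alignment on both axes.
        for axis in (0, 1):
            add_row(va, vb, axis, pb[axis] - pa[axis], 1.0)
    for vl, vr, pl, pr in disparity:
        # Disparity consistency: keep the original horizontal disparity
        # (equal x shifts) and cancel any vertical disparity.
        add_row(vl, vr, 0, 0.0, w_disp)
        add_row(vl, vr, 1, pr[1] - pl[1], w_disp)

    A, b = np.array(rows), np.array(rhs)
    # Fix the reference view by dropping its two unknowns (t_0 = 0).
    t, *_ = np.linalg.lstsq(A[:, 2:], b, rcond=None)
    return np.vstack([np.zeros((1, 2)), t.reshape(-1, 2)])
```

Solving both eyes in one system is what couples them in this toy setting: stitching the left and right views independently could give each eye a slightly different shift and introduce vertical disparity, which the shared disparity rows rule out.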

| Attachment | Size | Date | Who | Comment |
|---|---|---|---|---|
| 6182.mp4 | 91 MB | 12 Oct 2022 - 11:58 | Main.fanglue | |
| 6182.pdf | 3 MB | 30 Sep 2022 - 16:26 | Main.fanglue | |
| stereo.jpg | 369 KB | 21 Apr 2019 - 17:14 | Main.fanglue | |
| template_CGIconf(1).pdf | 6 MB | 21 Apr 2019 - 17:12 | Main.fanglue | |