Previously at the Festival of Doubt:

This page lists all the talks, discussions, dogmatic rantings, collective sharings, and bunfights, since records began in 2002.

2017

| When | Topic |

|---|---|

| 1 Feb | The CarlSIM Spiking neural net simulator : Will Browne |

| 8 Feb | Anomalies are not outliers : Alex Telfar |

| 8 Mar | Brainstorming how FoD works : everyone |

| 22 Feb | Monotone Operators : Bastiaan Kleijn |

| 22 Feb | A Multi-scale Consensus Model for Low-level Vision : Todd Zickler |

| 15 Mar | A bit about tensorflow : Mashall |

| 22 Mar | The Shattered Gradients Problem (if resnets are the answer, then what is the question?) : David Balduzzi |

| 29 Mar | Reputation and the origin of money : Marcus |

| 5 Apr | That kernel trick : Kurt Wan-Duo Ma |

| 12 Apr | Tensorflow vs PyTorch : Nico Despres |

| 19 Apr | Demo for Raspberry Pi : Kurt |

| 10 May | Mutual information and all that : Steven (drjellyman?) |

| 17 May | GANs (Generative Adversarial Networks) : Hamed Sadeghi |

| 24 May | Intro to Variational Inference via Normalising Flows : Mashall |

| 31 May | I've been thinking about... : Shima and Harry |

| 7 Jun | Thoughts on ML : David Balduzzi |

| 14 Jun | Planning session choosing ICML papers to read : everyone |

| 21 June | "Failures of Gradient-Based Deep Learning" : all-in |

| 28 June | "Sharp Minima Can Generalize For Deep Nets" : Alex Telfar |

| 5th July | Variational Boosting https://arxiv.org/abs/1611.06585 : Todd Zickler |

| 12th July | Deep CORAL: Correlation Alignment for Deep Domain Adaptation https://arxiv.org/abs/1607.01719 : Kurt |

| 19th July | Recurrent highway networks : Harry Ross |

| 26th July | Convolutional Sequence to Sequence Learning with Wavenets : Paul Mathews |

| 2nd Aug | GANs and stuff : Hamed |

| 9th Aug | ICML on, no FoD |

| 16th Aug | Paul Rubenstein (student of Carl Rasmussen and |

| 23rd Aug | ICML hotness : Marcus and Kurt |

| 30th Aug | RKHS and HSIC : Felix and Kurt |

| 6th Sept | clash with Prof Martin Butz seminar "From Predictive Sensorimotor Processing to Event-Oriented Conceptualizations" |

| 13th Sept | Catchup with everyone who has been away |

| 27th Sept | "Variational Inference using Implicit Models" https://arxiv.org/pdf/1702.08235.pdf : Mashall |

| 4th Oct | More on Implicit Models : Mashall |

| 11th Oct | The symbol grounding problem, with Prof Mark Steedman (Edinburgh) |

| 18th Oct | "Duality of Graphical models and tensor networks" (Robeva and Seigal) : Alex |

| 22 Nov | Use of Prior Information for Ultra-efficient Imaging: Paul |

2016

| When | Topic |

|---|---|

| 14 Jan | The difficulty of "explaining away" inference in neural nets : Marcus |

| 21 Jan | Questions about hand tracking using deepish nets : Kurt |

| 04 Feb | Strongly-Typed Recurrent Neural Nets : David Balduzzi |

| 11 Feb | "Hi Mark", the daftness of precision-recall curves, what Pareto meant, and so on |

| 18 Feb | Will had a notion |

| 3 Mar | A measure of consciousness: David Balduzzi |

| 10 Mar | Carving nature at the joints : Marcus Frean |

| 17 Mar | 5 minutes each on "Something I learned this week was..." |

| 31 Mar | Informal discussion |

| 7 Apr | Tony on Tenenbaum and Freeman's "Separating Style and Content with Bilinear Models" |

| 14 Apr | Alex on tSNE |

| 5 May | Michael Radich on modelling author attribution anomalies in the Chinese Buddhist canon |

| 12 May | "5 minutes of what did I learn this week" |

| 18 May | JP: Randomised Linear Algebra |

| 25 May | JP, David, Marcus on various ideas about learning from residuals (ResNets, Gradient Boosting, Upstarts) |

| 8 June | Alex and Paul : everyone install and get going with tensorflow |

| 15 June | Eigen means characteristic |

| 22 June | "5 minutes of what did I learn this week" |

| July 6 | Disentanglement, and Ideas for pet ML Course |

| July 13 | David: ICML debrief |

| July 20 | Elisenda: Emergence of barter |

| July 27 | Alex: how autograd works |

| Aug 3 | Marcus: thermal perceptrons |

| Aug 10 | Around the table, 5 mins on something you're doing |

| Aug 17 | Tony: RMSProp better in a weird way |

| Aug 24 | Alex: discuss "Decoupled neural interfaces using synthetic gradients" |

| Aug 31 | Bastiaan: convex optimization neural network |

| Sept 7 | Mashall: Gaussian process vs Kalman filter |

| Oct 10 | Marcus and Tony: disentangling messed-up causes |

| Nov 16 | Matt O’Connor : consensus optimization |

| Nov 30 | Marcus: autoencoding without an encoder |

2015

| When | Topic |

|---|---|

| 9 April | "Hi David" : David Balduzzi |

| 16 April | PGMs : Paul Teal |

| 23 April | What are the options for getting error bars on predictions? : Mashall Aryan |

| 30 April | Random Bits Regression : JP Lewis |

| 14 May | Probabilistic Back Propagation : Mashall Aryan |

| 21 May | Kingma & Welling's "Variational Autoencoders" : David Balduzzi |

| 28 May | Alpha matting : William |

| 4 June | Finding diffuse sources in astronomical images : Tony Butler-Yeoman |

| 11 June | Free-Energy-meets-LCS paper : Will Browne |

| 18 June | AlexNet : Gif |

| 17 July | A Topic in machine learning : Mashall Aryan |

| 24 July | multiple-cause RBMs : Marcus |

| 31 July | Conjugate gradients : JP Lewis, Tony Butler-Yeoman, Marcus Frean |

| 6 August | deConv nets : Gif |

| 20 August | Saddle points : Marcus Gallagher, UQ |

| 27 August | Discussion... : Marcus Gallagher, UQ |

| 3 September | ADMM : Arian Miralavi |

| 10 September | Reservoir Computing : Leo Browning |

| 24 September | discussion of a draft paper (Semantics, Representations and Grammars for Deep Learning) : David Balduzzi |

| 1 October | Consciousness, strong AI, functionalism : Marcus Frean |

| 8 October | CMA-ES works, but how? : Will Browne |

| 15 October | De-camped to the consciousness seminar over in Philosophy |

| 22 October | More about the details of CMA-ES : Will Browne |

| 29 October | No meetup this week - too many people away |

| 17 November | Social install / play with TensorFlow |

| 26 November | Discussion of fast Gaussian process inference |

| 3 December | Further discussion of Gaussian processes |

| 10 December | Discussion of Lagrange multipliers, generalisations etc : Bastiaan |

2014

| When | Topic |

|---|---|

| 30 July | Learning hinges : David |

| 7 Aug | How to explain Gaussian processes : JP |

| 14 Aug | Falsification : David |

| 21 Aug | Deep planning : Marcus |

| 4 Sept | Pulling faces : JP |

2013

| When | Topic |

|---|---|

| 25 Feb | The problem of induction: Hume, Popper, and Ockham-via-Bayes |

| 8 March | Deep learning |

| 22 March | The Blue Brain project : Praveen |

| 5 April | informal discussion of a Topic of Interest |

| 19 April | The prospects for detecting characteristic virus "crystals" in electron micrographs: Marcus |

| 26 April | I can't remember the topic. Was it MML? |

| 10 May | Farewell for Anna Friedlander |

| 17 May | Mona: practice talk for her phd proposal seminar |

| 6 Aug | Science of music |

| 13 Aug | ASMR + anticipated perception |

| 20 Aug | 16000 cores for 3 days on 200 million random YouTube images... deep learning of "face cell" etc - reportage here |

| 27 Aug | Time rotation and free will |

| 3 Sept | Participation in the Visualising Correspondence Networks "hackfest" for digital history, run by Sydney Shep |

| 10 Sept | Uniqueness and Legacy |

| 1 Oct | Discussion of a Topic of Interest |

| 8 Oct | Praveen: fast sparse particle filtering |

| 15 Oct | Incentivizing good governance |

2012

| When | Topic |

|---|---|

| 10 Dec | Marcus: Stag hunting |

| 3 Dec | Mona and Praveen: RVM and SVM |

| 19 Nov | Anna: strategies for binning pixel intensities in radio astronomy images |

| 5 Nov | Marcus and Praveen: an idea for multi-target tracking |

| 29 Oct | Mona: sparsity and the Relevance Vector Machine |

| 8 Oct | More on Functionalism vs Dualism |

| 24 Sep | Discussion: Functionalist theories of mind |

| 10 Sep | Discussion of Sophie Deneve's ideas (mf) |

| 3 Sept | Around the table: Praveen's weighted clustering idea, Mona's relevance vector machine issue, Anna's scoring of bounding boxes |

| 27 Aug | How to prepare the (PhD) proposal document |

| 20 Aug | More on reinforcement learning (mf) |

| 13 Aug | Introduction to reinforcement learning (mf) |

| 6 Aug | Discussion: what is unsupervised learning, really? |

| 30 July | Anna: introduction to topic models / latent Dirichlet allocation (LDA) |

| 23 July | "What is the most challenging part of machine learning?" |

| 16 July | Bhakta: "no brainstorming please!". A general discussion of Gaussian processes (different views of). |

| 9 July | Discussion: a potentially good fit for Gaussian processes and active vision |

| 25 June | brainstorm the general problem of visual "attention" with everyone |

| 18 June | Discussion (A+M) of strategies for setting bins in histograms of image pixel intensity |

| 11 June | More about the Jeffreys prior - we went over the "bent coin" example |

| 30 May | priors: improper, conjugate, and uninformative. (The Jeffreys prior and the apparent rationality of negative pseudocounts...) |

| 23 May | We mainly talked about Haar wavelets and Toktam Ebadi's Problem: using them to classify digits of unknown rotations |

| 16 May | Why do we max likelihood of parameters in a Gaussian Process? |

| 9 May | PGM stuff - we talked about supervised/unsupervised learning and how that was reflected in the graph. Discussed but didn't resolve why 'features' should be independent if possible - surely there's a PGM perspective on that? |

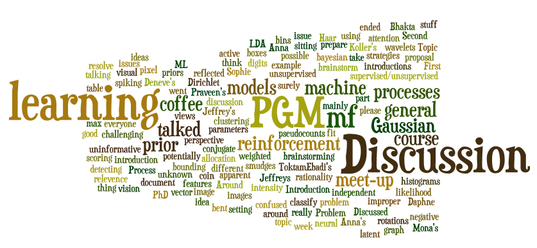

| 2 May | Discussion around Daphne Koller's PGM course, which we're all sitting in on |

| 25 April | "the thing you're most confused about in ML, this week"... I think we ended up talking about Anna's images and the issues with detecting smudges in them |

| 18 April | Second meet-up: let's all take the PGM course at http://www.coursera.org |

| 5 April | First meet-up: coffee, introductions |

2010

| When | Who | Topic |

|---|---|---|

| 18 Feb | Keith Cassell | Ask Peter Norvig Anything (without Peter Norvig). Conducted under Keith's Rules of Disorder |

2009

| When | Who | Topic |

|---|---|---|

| 26 Nov 2009 | Peter Andreae | Vietnamese Document Representation and Classification (practice talk for AI09) |

| 12 Nov 2009 | Miles Thompson | Understanding money on the social network |

| 1 Oct 2009 | Peter Andreae | Learning to act in a complex world |

| 6 Aug 2009 | Sergio Hernandez | How to predict the past using future imprecise information |

| 14 May 2009 | Mengjie Zhang | There Is a Free Lunch for Hyper-Heuristics, Genetic Programming and Computer Scientists |

| 30 Apr 2009 | Marcus Frean | Restricted Boltzmann Machine II |

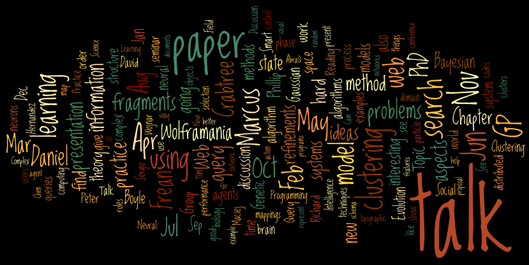

| 16 Apr 2009 | Daniel Crabtree | Restricted Boltzmann Machine |

| 2 Apr 2009 | Will Smart | A mathematical model for visual beauty |

| 19 Mar 2009 | Richard Proctor | Using information theory to improve music theory |

| 12 Feb 2009 | Social Evolution Reading Group | Casual discussion |

| 5 Feb 2009 | Marcus Frean | Solving expensive parameter optimization problems, without going broke |

| 29 Jan 2009 | Adam Clarke | Exploring the space between low-level sensory inputs and high-level concepts |

| 22 Jan 2009 | Social Evolution Reading Group | Sexual selection and Fisher's runaway process |

| 15 Jan 2009 | Keith Cassell | Applying the concepts of social network analysis to software engineering |

| 8 Jan 2009 | Social Evolution Reading Group | Price's equation applied to Group Selection |

2008

| When | Who | Topic |

|---|---|---|

| 18 Dec 2008 | Kourosh Neshatian | In Search of Intelligent Aliens: On Scalability of Intelligence |

| 11 Dec 2008 | Social Evolution Reading Group | animal communication and honest signaling |

| 4 Dec 2008 | no discussion | nb. most staff away at AI'08 |

| 27 Nov 2008 | Will Smart | Will's neat idea for a robot -- Mechanical worms and snakes |

| 20 Nov 2008 | Tudos Gentes | We talked about honest signals, and Fisher's runaway process |

| 13 Nov 2008 | Social Evolution Reading Group | altruism and inclusive fitness |

| 6 Nov 2008 | Dirk Derom | METANEVA - a tool for neuroinformatics |

| 30 Oct 2008 | Social Evolution Reading Group | basics of modeling evolution as variation + selection, basics of games |

| 23 Oct 2008 | Maciej Wojnar | Artificial Intelligence vs Artificial Minds, discussion of the Technological Singularity |

| 15 Oct 2008 | Keen Persons | Meeting to talk about FoD Reanimated |

| 2 May 2008 | Sergio Hernandez | The Population-based particle filter |

| 5 Feb 2008 | Rene Doursat | Architectures That Are Self-Organized and Complex: From Morphogenesis to Engineering |

2007

| When | Who | Topic | Abstract |

|---|---|---|---|

| 29 Oct 2007 | Daniel Crabtree | Understanding Query Aspects with applications to Interactive Query Expansion | This is a practice talk for a paper that I am presenting at WI 2007 in Fremont, California in a few days time. The abstract of the paper follows. For many hard queries, users spend a lot of time refining their queries to find relevant documents. Many methods help by suggesting refinements, but it is hard for users to choose the best refinement, as the best refinements are often quite obscure. This paper presents Qasp, an approach that overcomes the limitations of other refinement approaches by using query aspects to find different refinements of ambiguous queries. Qasp clusters the refinements so that descriptive refinements occur together with more obscure and potentially better performing refinements, thereby explaining the effect of refinements to the user. Experiments are presented that show Qasp significantly increases the precision of hard queries. The experiments also show that Qasp’s clustering method does find meaningful groups of refinements that help users choose good refinements, which would otherwise be overlooked. |

| 28 Sep 2007 | Sergio Hernandez | PhD Seminar practice talk | Sensor fusion problems occur when multiple sensors are capturing information from an observed environment. Multiple sensors can provide more accurate estimates than their single counterpart, but it is hard to combine the whole information to form a single estimate. The measurements usually have some level of internal noise as well as environmental uncertainty. Combining all the information gathered so far is a challenging computational problem, that can be even more difficult when the environment is non-stationary and the model dimension is also unknown. This PhD thesis will develop a Bayesian method for calculating the probability of the current state of a system from noisy observations, when the dimensionality of the model is changing. The method proposed uses point process theory for the time-varying number of observations and sequential Monte Carlo methods for estimating the system state. |

| 27 Jul 2007 | Daniel Crabtree | Exploiting Underrepresented Query Aspects for Automatic Query Expansion | This is a practice talk for a paper that I am presenting at KDD 2007 in San Jose in early August. The abstract of the paper follows. Users attempt to express their search goals through web search queries. When a search goal has multiple components or aspects, documents that represent all the aspects are likely to be more relevant than those that only represent some aspects. Current web search engines often produce result sets whose top ranking documents represent only a subset of the query aspects. By expanding the query using the right keywords, the search engine can find documents that represent more query aspects and performance improves. This paper describes AbraQ, an approach for automatically finding the right keywords to expand the query. AbraQ identifies the aspects in the query, identifies which aspects are underrepresented in the result set of the original query, and finally, for any particularly underrepresented aspect, identifies keywords that would enhance that aspect’s representation and automatically expands the query using the best one. The paper presents experiments that show AbraQ significantly increases the precision of hard queries, whereas traditional automatic query expansion techniques have not improved precision. AbraQ also compared favourably against a range of interactive query expansion techniques that require user involvement including clustering, web-log analysis, relevance feedback, and pseudo relevance feedback. |

| 22 Jun 2007 | Jason Xie | Practice Talk for GECCO 2007 | Talk 1: An Analysis of Constructive Crossover and Selection Pressure in Genetic Programming Talk 2: Another Investigation on Tournament Selection: modelling and visualisation |

| 15 May 2007 | Daniel Crabtree | QC4 - A Clustering Evaluation Method | This is my practice talk for PAKDD 2007 where I will be presenting this paper next week. It's about evaluating clustering algorithms. Practically nothing about web pages or web search in this talk. But obviously there are some web examples thrown in there for good measure and of course the ubiquitous Jaguar example you are probably all too familiar with by now. |

2006

| When | Who | Topic | Abstract |

|---|---|---|---|

| 7 Dec 2006 | Daniel Crabtree | Query Directed Web Page Clustering | A practice talk for the paper "Query Directed Web Page Clustering", which is a paper that I am presenting at Web Intelligence 2006 in Hong Kong at Christmas. It's an interesting clustering algorithm that gets very good performance. The presentation is to be 20 minutes long. |

| 17 Nov 2006 | Yun | A generic model of knowledge transfer, incremental machine learning, and recommender systems | Practice for my PhD proposal talk. |

| 13 Oct 2006 | Sergio Hernandez | Discussion of "Bayesian-optimal design via interacting particle systems". | I've found this very interesting paper that I think is the future of computing |

| 4 Aug 2006 | Marcus Frean | Formation and recovery of topographic mappings in the brain | I will describe a model for the formation of topographic mappings in the brain that incorporates Eph-ephrin signalling and is able to account for earlier experiments involving expansion and contraction of the map following surgical interventions. Robustness of the map is achieved by invoking (a) regulation of ephrin expression in tectal cells that are innervated from the retina, and (b) smoothing of ephrin levels in the tectum via a local diffusion process. |

| 30 Jun 2006 | Huayang Xie | Practice Talk for CEC2006: gpGP: good predecessors in Genetic Programming | |

| 9 Jun 2006 | Marcus Frean | Discussion on particle filtering | Discussion around the super-basics of particle filtering, as I understand it - see readings/PFmorons.pdf . |

| 26 May 2006 | Sergio Hernandez | Where does the mind stop and the rest of the world begin? | It is well known that Bayesian methods are not so straightforward in the pattern recognition framework. For this reason, lots of different techniques have arisen to address intractability and parameter estimation in sequential learning for general state space models. This talk will define some approaches and hopefully will explore new research directions for this problem. |

| 19 May 2006 | Gareth Baxter | How to win friends and influence people: the dynamics of language change. | An introduction to the Language Change modelling I did for my PhD, and am attempting to continue... |

| 5 May 2006 | Will Smart | Unifying dynamic sequences (fragments) and mechanical analogies (in genetic programming). | Mock for the PhD proposal seminar I will give to a slightly less critical audience on the 11th at 11. This will be of interest to anyone who either wishes to see how the great dream of the GP building block hypothesis may finally be seen in all its awesome glory, or wishes to find out how it may be shown that it fails where one may expect it to hold firm. Also those who enjoy GP, schema theory, fragments, the origami of absurdly complex algorithms, or picking holes in hard-earned research may be interested. I will talk on fragments in GP; a fragment is a connected subgraph of a program tree. I plan to explore how fragments, as schemas, propagate through evolution in the remainder of the PhD. Using tools I am developing, fragments in populations and evolutions of GP will be identified. Analysis of the fragments will lead to greater knowledge of the accuracies of the GP building block hypothesis and GP schema theory. It is also predicted that intelligent use of fragments will also lead to better GP. |

| 7 Apr 2006 | Cam Skinner | Mutation, Schmutation. | Mutation shown to be harmful: random search is better than mutation in GAs |

| 31 Mar 2006 | Jason Xie | Practice Talk for EuroGP: population clustering and GP for stress detection. | |

| 24 Mar 2006 | Cam Skinner | Informal presentation on Mutation in GAs | Why random search is better than mutation in GAs. Followed by a flame session led by Maciek, if Cam runs out before 4.45pm |

| 2 Mar 2006 | Daniel Crabtree | Improving Web Page Clustering with Global Document Analysis | This is just a short talk (15 minutes); it is going to be a practice for a talk I am giving at a Workshop in Auckland on Saturday. I would like to have comments on the talk and get any last minute ideas for improving it. After the talk, I can discuss the work further and elaborate on anything that interests anyone or if no-one is interested, it'll be a very short FoD. Here is the abstract for the talk: Web page clustering methods categorize and organize search results into semantically meaningful clusters that assist users with search refinement. Finding clusters that are semantically meaningful to users is difficult. In this presentation, I describe a new web page clustering algorithm that chooses clusters that more closely relate to the user's query. The algorithm uses term co-occurrence statistics to construct feasible clusters, which are merged, ranked, and selected using a heuristic model of web page clustering usability. The performance of the new algorithm is evaluated and compared against other algorithms and a significant performance improvement is achieved over the other clustering algorithms. |

| 24 Feb 2006 | Maciej Wojnar | Practice run for PhD proposal seminar | I'm doing a practice talk for my PhD proposal seminar. My PhD is about developing an intelligent agent that is effective in the human domain. Specifically, it is about learning effective Goal Decomposition rules. |

2005

| When | Who | Topic | Abstract | |

|---|---|---|---|---|

| 18 Nov 2005 | Phillip Boyle | Bayesian Model Comparison - Calculating the Evidence | Overview on the use of probabilistic evidence to select between alternate models or hypotheses. On paper this is clear cut, but the hard part is computing the evidence, which amounts to numerical integration. I'll look at annealed importance sampling, which is a method that can do this without having to free up 75 years of computer time. | |

| 4 Nov 2005 | Will Smart | Empirical Schema Theory Validation by Finding Common Program Substructures | The topic will be some things I have been doing for my PhD, dealing with repeated code in Genetic Programming (GP). I have been empirically finding common "fragments" of programs, and the talk will be on: * Some previous related material, including schema theory in GAs, GP; * What are fragments in GP programs? * How do we find fragments? * Some things we can do with fragments. Time permitting, I will be describing the problem domain I have recently been using: Object Trackers/Detectors/Classifiers. | |

| 28 Oct 2005 | Maciej Wojnar | The Flight of Icarus | Earlier this year, I had several important ideas about agents that act in interesting worlds. In this talk, I'm going to talk about Icarus, a system from 5 years ago that stole my ideas and messed them up. I'll talk about the challenges of creating an agent that can act intelligently, the problems that planning and reacting agents have, and teleoreactive agents that try to avoid these problems by integrating planning and reacting. I'll then describe Icarus, an implementation of a teleoreactive agent, and discuss its limitations and the extensions it requires. | |

| 28 Oct 2005 | Mike Paulin (Otago) | The Neural Particle Filter: A model of neural computations for dynamical state estimation in the brain | Recent experimental work in collaboration with Larry Hoffman at UCLA has shown that, as a consequence of fractional order dynamical characteristics of vestibular sensory transduction mechanisms, single spikes generated by vestibular motion-sensing neurons can be regarded as measurements of the dynamical state of the head. We hypothesize that this measurement is translated into an explicit Monte Carlo representation in the brainstem vestibular nucleus, which forms a central map of head state. In this representation, neural spikes are regarded as particles and their spatial distribution over the map at any instant represents the brain's knowledge of head state. Particles are constrained to move along axons, corresponding to pre-defined state trajectories. A network can be constructed so that the distribution of spikes in the map approximates the Bayesian posterior distribution of states given the sense data. The neural particle filter model generates the circuit topology and response properties of real neurons in the brain, from purely statistical principles. See Mike's recent paper for the details. | |

| 21 Oct 2005 | Daniel Crabtree | A new approach to sandwiches. | My report on the WI/IAT 2005 international conference. This talk is going to be a summary and overview of interesting ideas, projects, and papers that I can remember from the conference. Additional ideas from people that I talked to are likely to be included. This talk is likely to be exceptionally light on technical details - very much in contrast to most of the actual talks at the conference. BTW: I have chosen to keep the automagically generated title. | |

| 14 Oct 2005 | Zbigniew Michalewicz (Adelaide) | Open discussion | This follows Zbyszek's seminar to the School earlier in the day. Prof Michalewicz is one of the big names in the area of evolutionary computing (together with John Holland, David Fogel, John Koza, etc.). He has chaired a large number of international conferences in AI, particularly evolutionary computing. He is the author of over 200 research publications, including some famous books, such as "Genetic algorithms + Data Structures = Evolutionary Programs" and "How to Solve It: Modern Heuristics". | |

| 7 Oct 2005 | Mengjie Zhang | New kinds of negative social processes (auto-generated). | I am going to discuss some issues in genetic programming, linked to classification and evolutionary computing. | |

| 30 Sep 2005 | Marcus Frean | On the optimisation of passive strategies using integration (auto-generated). | I'm going to attempt to draw an analogy between predictive coding and topographic mappings. Your job will be to decide if this profound insight is (a) entirely spurious, or (b) merely a waste of time. The talk will be a boundary case on the "preparedness" dimension. | |

| 23 Sep 2005 | Phillip Boyle | 100 attempts to find 8 numbers that balance 2 poles | Introduction to and demonstration of an efficient method to find controllers that balance two poles on a cart. The algorithm infers a fitness surface over controller space and uses that to guide its search. I offer an apology in advance to those with a pathological dislike of MATLAB and the 92% of you who don't want to hear the word "Gaussian" more than once per hour. | |

| 16 Sep 2005 | Russell Tod | Discussion of distributed phase codes. | | |

| 2 Sep 2005 | Daniel Crabtree | Web Clustering - New Scoring and Selection Methods, New Evaluation Method | I will give: a 20 minute presentation on a new scoring method and a new cluster selection method. a 10 minute presentation on a new evaluation method. These are two talks that I will be giving at the Web Intelligence Conference in a week or so. So these will be practice runs. Please ask questions and help me sort out any problems with these talks. | |

| 26 Aug 2005 | David MacKay (Cambridge) | Distributed Phase Codes for Associative Memory, Prediction, and Latent Variable Discovery | A distributed phase code represents objects by the times of neuronal action potentials in a large number of neurons. If the object has instantiation parameters (for example, scale and pose, in the case of visual objects), the timings and probabilities of the action potentials are smoothly-varying functions of those parameters. If multiple objects are present, their associated action potential patterns are simply superposed in the distributed phase code. We present simple learning rules that allow distributed phase codes to instantiate associative memory and prediction. The resulting system can store and recall continuous-valued memories, singly or concurrently. Point attractors, line attractors, and manifold attractors are all learned by the same rules. Similar recursive learning rules take distributed phase codes for elementary objects and produce distributed phase codes for higher-order objects. Short Bio: David MacKay is a Professor in the Department of Physics at Cambridge University. He obtained his PhD in Computation and Neural Systems at the California Institute of Technology. His interests include machine learning, reliable computation with unreliable hardware, the design and decoding of error correcting codes, and the creation of information-efficient human-computer interfaces. | |

| 19 Aug 2005 | Yun Zhang | Polly | A soft polynomial network based learning system. | |

| 12 Aug 2005 | Richard Mansfield | Rock-paper-scissors | A model for the emergence of intransitive competition in biology | |

| 5 Aug 2005 | Xiaoying Sharon Gao | learning patterns for information extraction from web pages | I will briefly introduce the projects I am currently supervising and then talk about some recent research on learning patterns for information extraction from Web pages. | |

| 29 Jul 2005 | Daniel Crabtree | Web Clustering - A Sneak Peek | I will give a sneak peek into the content of two papers that I've had accepted at the Web Intelligence conference. The full presentation of these with slides will come in a few weeks. This sneak peek is just to introduce some of the interesting ideas in both papers, so I have a feeling for the type of content to include in my actual presentation. I will follow that with some sort of demonstration of my clustering system. I'm pretty sceptical as to whether a live demonstration of any new searches can be done due to time constraints, but there are a few prepared searches to look through. At the start of the talk we will decide on a 1 word search and try to have it ready to view by the end of the talk, so bring ideas for that. | |

| 22 Jul 2005 | Maciej Wojnar | Exploiting Structure when Generalizing | I'm going to talk about a couple of "not fully baked" ideas I've had. I'm most interested in trying to achieve the goal of developing an autonomous, intelligent agent that can act effectively in the human domain. In the talk I'll define what I mean by that and explain why I am pessimistic about current methods leading to this goal. The world is a very structured domain and I believe that any algorithm for generalizing that scales to this domain will need to exploit that structure. To keep the talk grounded and not too fluffy I'll talk about some AI systems that exploit structure: a little program I wrote for solving Rubik's cube (fun to watch) and a less trivial program that can make a cup of coffee efficiently in a complex, relational, partially-observable world (not as fun to watch). I'll talk about SOAR (a system that unfortunately is very similar to my one), chunking, explanation based learning, and why I think they are dodging the real issue. I'll also talk about (if I have time) David Andreae's PhD thesis program that exploits structure to generalize images. The talk is going to be informal. | |

| 8 Jul 2005 | Will Smart | Science using art created with science | In GP we use genetic programs, but what do they look like? In this talk I will demonstrate a real-time raytracing renderer for programs that I have made. The output is stunning: who would have known the wacky shapes (in feature space) that GP uses all the time? Aside from looks, the renderer has things to say about the way GP works, such as the role of functions in GP and causes of early convergence. | |

| 1 Jul 2005 | Phillip Boyle | Linear combinations of random features | This is a follow-up to the talk Marcus gave on the liquid state machine. I'll be looking at the advantages of learning a linear combination of random features, instead of learning the features themselves. (Here, a feature is just a non-linear transformation of input space.) Advantages include convexity (no local minima) and analytic solutions (no MCMC or non-linear optimisation required). Disadvantages (damn it) will be flippantly glossed over, and then seriously alluded to, and finally embraced in their entirety. | |

| 30 Jun 2005 | Huayang Xie | Practice Talk for CEC2006 | gpGP: good predecessors in Genetic Programming | |

| 24 Jun 2005 | Marcus Frean | factor graphs, probability propagation, and all that. | A dry run of a talk I'll be giving next week in Wanaka at the Hidden Markov Models workshop. Here is the draft presentation as a PDF | |

| 17 Jun 2005 | Marcus Frean | In praise of senseless arbitrary complexity. | Last week Mukhlis's talk generated an interesting discussion that I propose we continue, because it's a subject that seems to come up over and over again. I'd like to kick things off by defending (apparently) senseless arbitrary complexity. I'll mention Neal's result relating Bayesian NNs to Gaussian processes, then SVMs, followed by a brief description of a spiking neural model due to Maass et al. The paper I'm discussing is available as #148 from Wolfgang's website (it's in press at J. of Physiology). But I'd really like to see someone (else) tackle #165 sometime soon. Any takers? | |

| 10 Jun 2005 | Mukhlis | Review of a paper by Chen and Chen | We reviewed "Toward an evolvable neuromolecular hardware: a hardware design for a multilevel artificial brain with digital circuits", Jong-Chen Chen and Ruey-Dong Chen, Neurocomputing 42 (2002) 9-34. | |

| 3 Jun 2005 | Yun Zhang | Poly & prior schema. | The talk will present a couple of ideas in my Masters (on inductive logic programming) -- Polly and Prior Schema. Polly is for reducing the complexity from exponential to polynomial. Prior is to do with our sort of training examples. They turn out to depend on each other so I will present them both. Specifically they have 100% to do with probabilities, 10% with information theory, 50% with neural networks, 10% with belief nets, 30% with logics, 20% with philosophy, and 5% with version space (some normalisation is required). | |

| 27 May 2005 | Russell Tod | Spiking neuron models for control. | The talk will present results from my honours project, which investigates realistic models for biological neurons by embedding them in simulated physical agents. These agents attempt to control simple dynamical systems well - for example balancing poles, avoiding obstacles, and the pursuit and evasion of other agents. Their performance on these tasks highlights certain aspects of these realistic neurons compared to the usual "neurons" found in neural nets. | |

| 6 May 2005 | Ryan Woodard | Memory. | Is memory the same process in plasmas, SOC models and brains? | |

| 29 Apr 2005 | Marcus Frean | GAs to go (can I get fries with that?) | This talk will outline A Topic in Computer Science, or similar. Pondy has agreed to provide interjections. (in fact it was about density modelling using flow-field information from a camera mounted on a car...) | |

| 22 Apr 2005 | Chris Brookes (SES) | Lost in space: 7 reasons why geography is hard. | "Nearly everything happens somewhere, and where it happens matters". People understand this intuitively in everyday life but increasingly, with the support of computers and information systems, people are applying geography to make major decisions about managing the world we live in. So geography is important. Unfortunately it is also hard. In this seminar I will introduce some fundamental geographic problems, show why they are hard, and talk about some of the attempts to tackle them using computation. Geocomputation is a relatively new field that has developed from a fusion of Geographical Information Systems and computational methods, and makes significant use of novel techniques such as cellular automata, genetic algorithms, neural networks and multi-agent simulations. Anyone with an interest in spatial questions or any computer scientist looking for a real application is welcome to join the discussion. | |

| 15 Apr 2005 | Phillip Boyle | Which way is up? | What's the best way to estimate the gradient of a noisy function? Among other things, we might want to do this to help solve stochastic optimisation problems. Simple methods use finite differences. Other methods find interpolating models, and then differentiate the model. The method I have here fits a Gaussian Process model around the point at which you want the gradient. Fortunately, the derivative of a Gaussian Process is itself a Gaussian Process - and this has some helpful consequences. I'll give some examples of this, and try to explain how it works. | |

| 8 Apr 2005 | Daniel Crabtree | The problems of searching the web, web clustering, and possible solutions. | This will be an informal talk about the problems that exist with searching the web, the capabilities of web clustering and how far it currently goes in addressing the problems of searching the web, and my possible solutions for how the remaining problems could be solved. The talk will start with a brief look at the big picture and the ultimate goal of search and more specifically the ultimate way of obtaining information. | |

| 1 Apr 2005 | Maciej Wojnar | Planning as Communication. | I'm going to talk about a paper I read that presents an interesting approach to planning. | |

| 23 Mar 2005 | Will Smart | Communal Binary Decomposition for Multiclass object classification. | A trial run of his EuroGP presentation. | |